DeepSeek with Ollama

Ollama is a tool for running large language models (LLMs) locally on our own machine. Here we use it to run DeepSeek, an LLM that can handle tasks such as text generation, summarization, and conversational AI. To make interacting with DeepSeek more convenient, we put Open WebUI in front of it as a web interface for entering prompts and reading responses.

Ollama

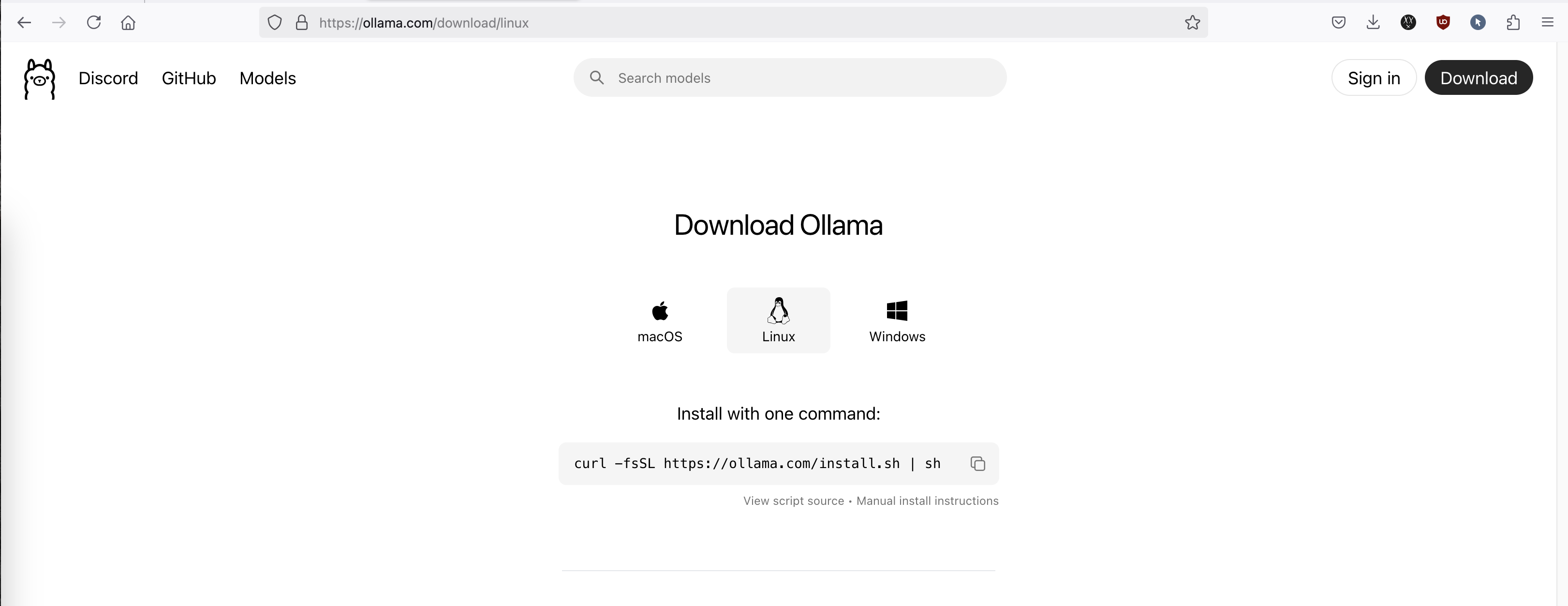

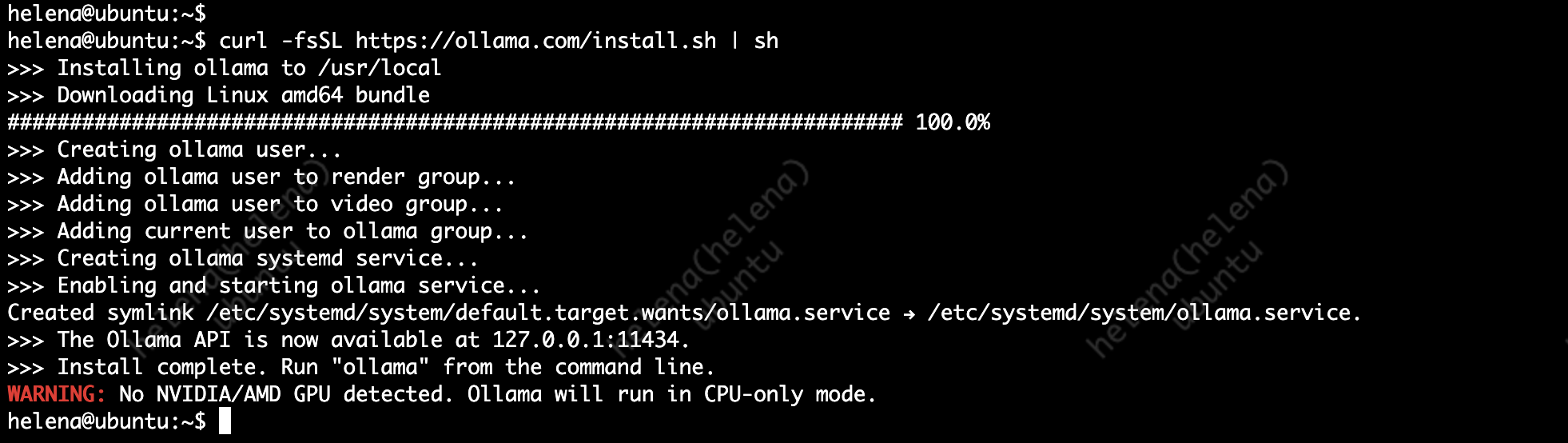

First, let’s download Ollama. Here we’ll run it on Ubuntu Server.

Run the Linux install command shown on the Ollama download page on the server.
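The command itself only appears in the screenshot. At the time of writing, the Linux instructions on ollama.com use a one-line install script. The sketch below wraps that script so it only runs when `ollama` is not already on the PATH. The function name `install_ollama` is our own, and the script URL should be checked against the download page before use.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Install Ollama with its Linux install script, unless the binary is already present.
install_ollama() {
  if command -v ollama >/dev/null 2>&1; then
    echo "Ollama already installed: $(ollama --version)"
    return 0
  fi
  # Downloads and runs the installer (it uses sudo internally when not run as root).
  curl -fsSL https://ollama.com/install.sh | sh
  ollama --version
}
```

Call `install_ollama` once on the server. Running it a second time only prints the installed version.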

DeepSeek

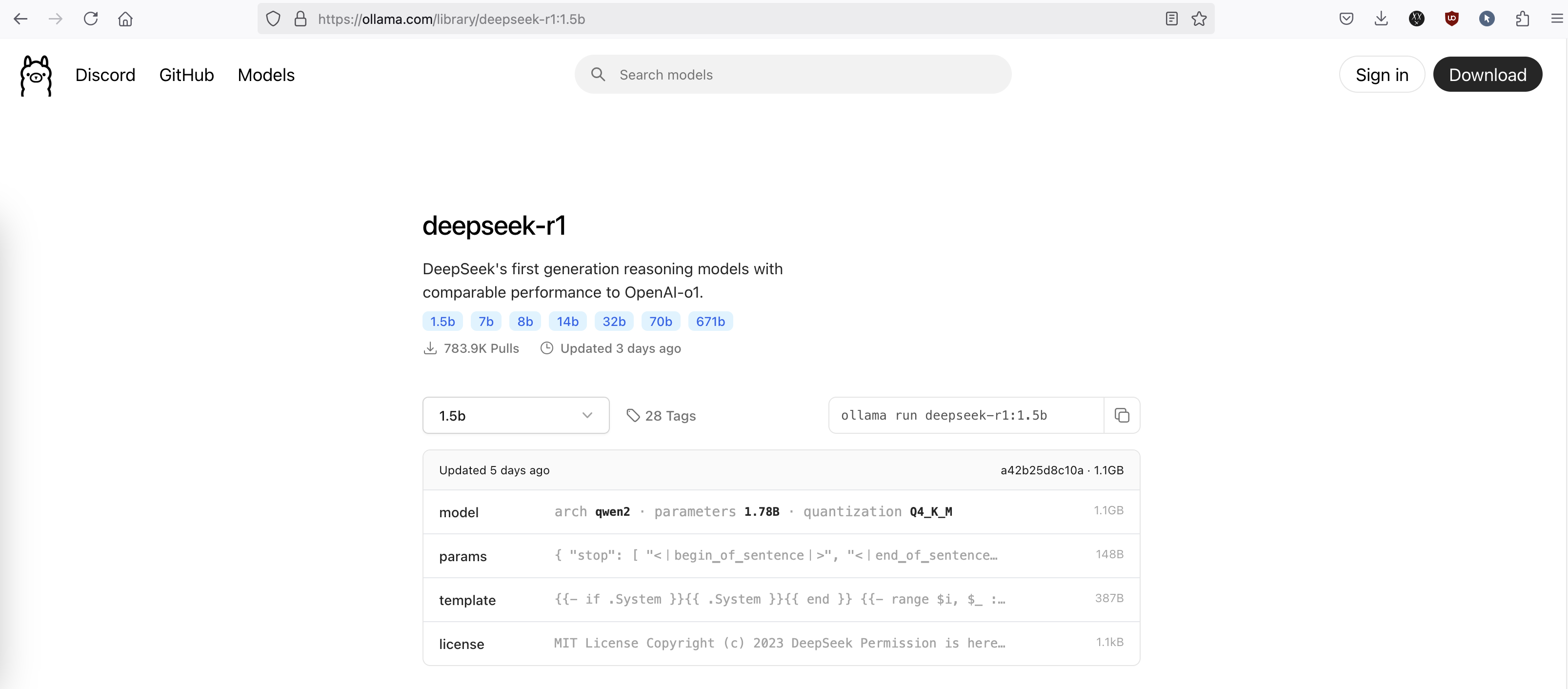

Now that Ollama is installed, we can download the model we want to use, which in this case is DeepSeek.

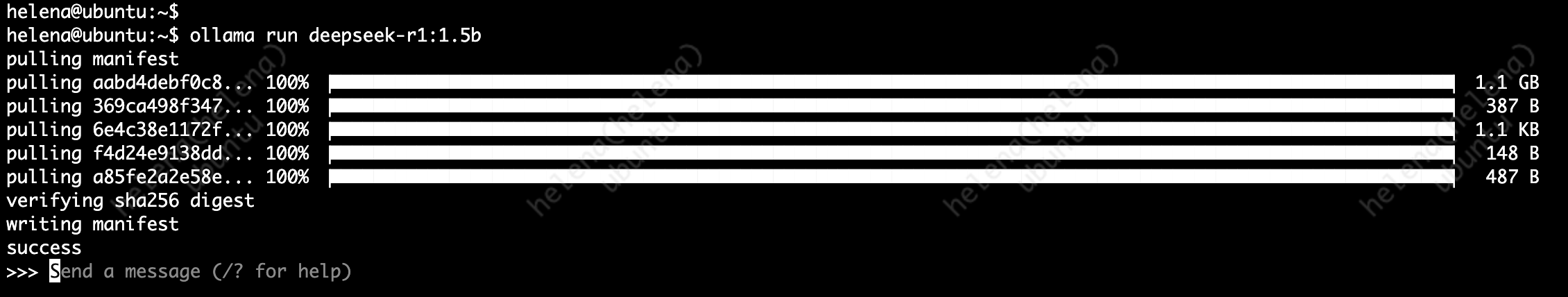

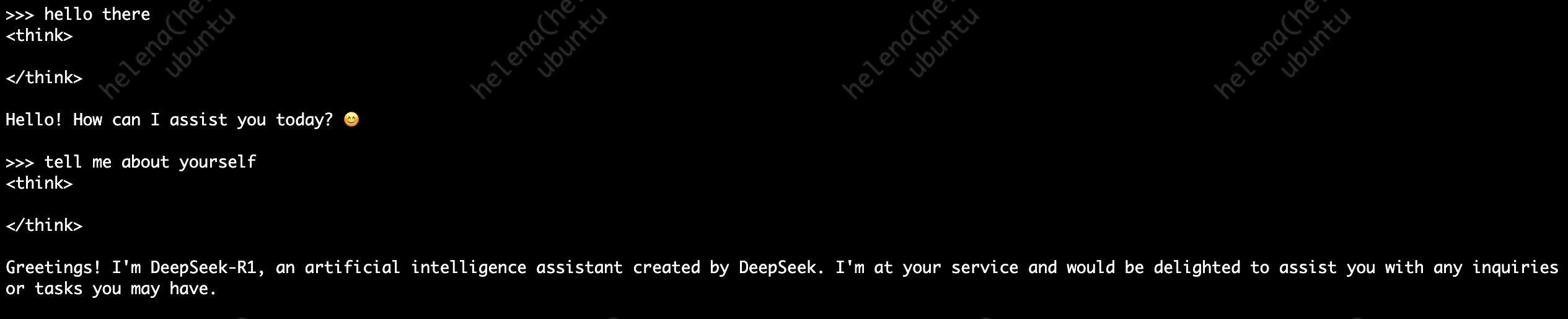

Run “ollama run deepseek-r1:1.5b” on the server. This pulls the 1.5B-parameter variant of DeepSeek-R1 if it isn’t downloaded yet, then opens an interactive chat with it.
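`ollama run` both downloads the model and starts a chat. The Ollama CLI also lets you do these separately. `ollama pull` fetches the weights without opening a chat, `ollama list` shows what is installed, and passing a prompt as an argument to `ollama run` prints one answer and exits. Below is a minimal sketch of both uses. The `MODEL` variable and the `pull_model` and `ask` helpers are hypothetical names chosen for illustration.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Model tag used in this post; other DeepSeek-R1 sizes use different tags.
MODEL="${MODEL:-deepseek-r1:1.5b}"

# Download the model weights without opening the interactive chat, then list local models.
pull_model() {
  ollama pull "$MODEL"
  ollama list
}

# Send a single prompt non-interactively and print the model's answer.
ask() {
  ollama run "$MODEL" "$1"
}
```

For example, run `pull_model` first, then `ask "Summarize what Ollama does in one sentence."`.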

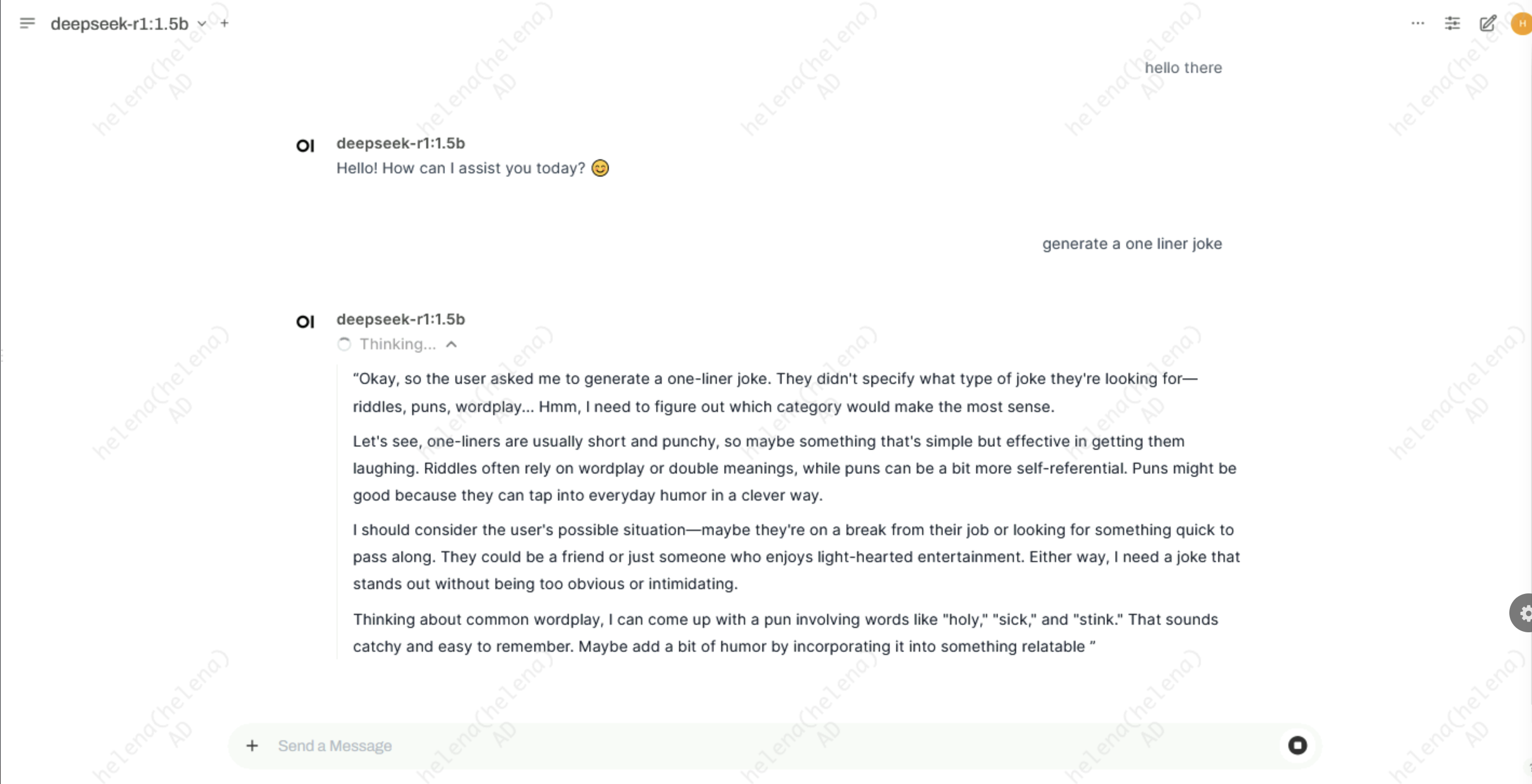

And that’s pretty much it: we can now chat with the model running locally.

Running “/set verbose” in the chat makes Ollama print timing statistics after each response. On this machine the eval rate is around 6.11 tokens/second, which is not that great.
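The same figure can also be computed outside the chat through Ollama’s REST API. A non-streaming `/api/generate` response includes `eval_count` (the number of generated tokens) and `eval_duration` (in nanoseconds). The rate is therefore tokens/s = eval_count / eval_duration × 10^9. The sketch assumes `curl` and `jq` are installed and that Ollama listens on the default `localhost:11434`. The function names and the sample prompt are our own.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Convert a token count and a duration in nanoseconds into tokens per second.
tokens_per_second() {
  awk -v n="$1" -v ns="$2" 'BEGIN { printf "%.2f\n", n / (ns / 1e9) }'
}

# Ask the model one question through the REST API and print its generation speed.
measure_speed() {
  local model="${1:-deepseek-r1:1.5b}" prompt="${2:-Why is the sky blue?}"
  local body resp
  body="$(jq -n --arg m "$model" --arg p "$prompt" '{model: $m, prompt: $p, stream: false}')"
  resp="$(curl -s http://localhost:11434/api/generate -d "$body")"
  tokens_per_second "$(jq -r '.eval_count' <<<"$resp")" "$(jq -r '.eval_duration' <<<"$resp")"
}
```

For example, 611 tokens generated in 100 seconds (100000000000 ns) gives 6.11 tokens/s. That matches the kind of rate reported above.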

Open WebUI

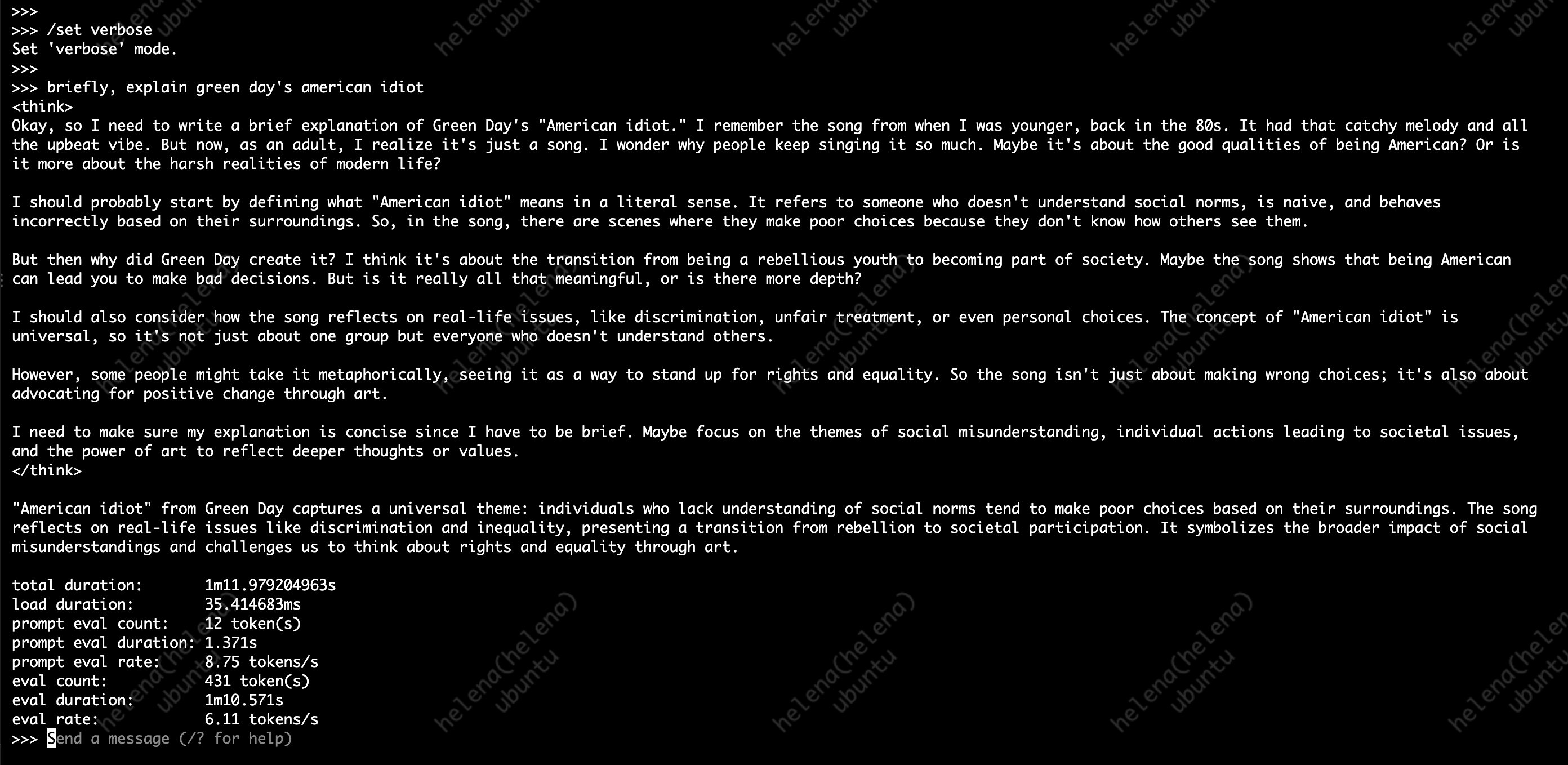

Now that DeepSeek is running on our machine, let’s install a front end so we can use it from a web browser. First, install Docker.
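The post doesn’t say which Docker installation method was used. One option on Ubuntu is the `docker.io` package from Ubuntu’s own repositories; Docker’s official apt repository is another. The sketch below assumes the `docker.io` package. `install_docker` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Install Docker from Ubuntu's repositories if it is not already present.
install_docker() {
  if command -v docker >/dev/null 2>&1; then
    echo "Docker already installed: $(docker --version)"
    return 0
  fi
  sudo apt-get update
  sudo apt-get install -y docker.io
  # Start the Docker daemon now and on every boot.
  sudo systemctl enable --now docker
  docker --version
}
```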

Then open the Open WebUI GitHub page and copy the “docker run” command from its installation instructions.

Paste it into a shell on the Ubuntu server and let Docker pull the image and start the container.
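At the time of writing, the Open WebUI README gives the following command for the case where Ollama runs on the same host. It does three things:

- maps host port 3000 to the container’s port 8080,
- lets the container reach the host as `host.docker.internal`,
- stores data in a named volume called `open-webui`.

Compare it with the current README before running it. The `start_open_webui` wrapper is our own, and you may need to prefix `docker` with `sudo` if your user is not in the `docker` group.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Start Open WebUI in the background; the UI is then served on host port 3000.
start_open_webui() {
  docker run -d \
    -p 3000:8080 \
    --add-host=host.docker.internal:host-gateway \
    -v open-webui:/app/backend/data \
    --name open-webui \
    --restart always \
    ghcr.io/open-webui/open-webui:main
}
```

`--restart always` brings the container back after a reboot, so this only needs to be run once.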

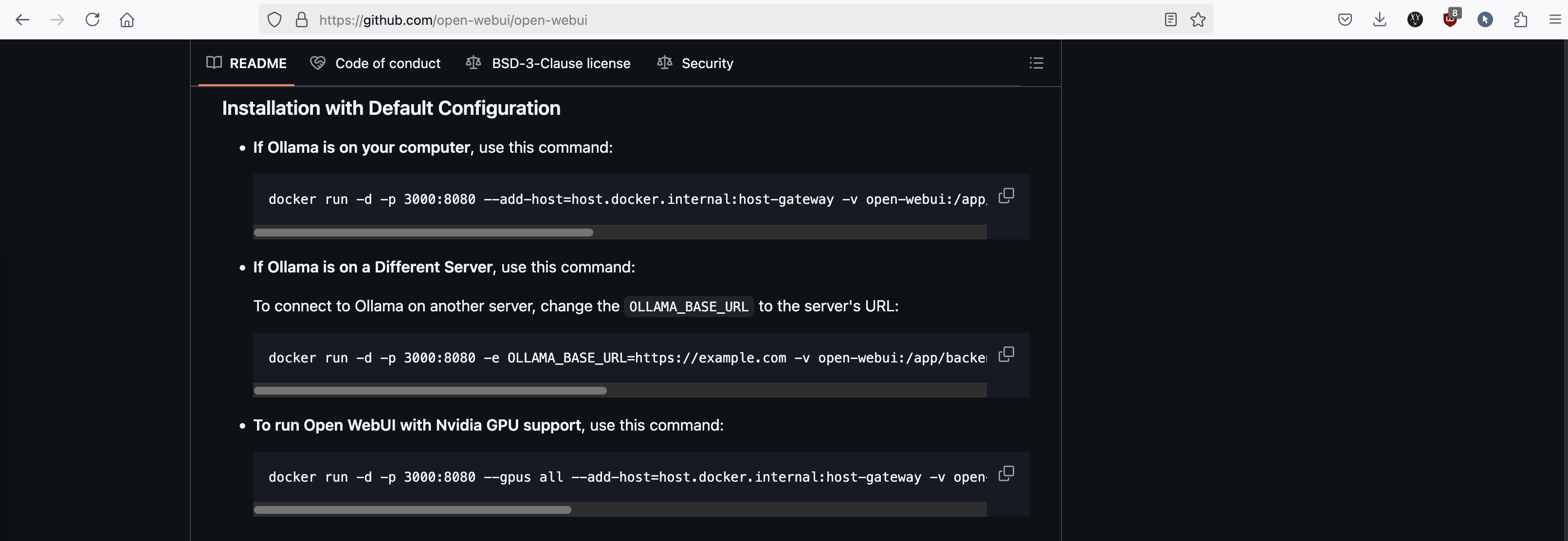

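By default Ollama listens only on 127.0.0.1. To make it reachable from outside the local machine (including from the Open WebUI container), add this line under the [Service] section of “/etc/systemd/system/ollama.service”:

```ini
Environment="OLLAMA_HOST=0.0.0.0"
```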

Then reload systemd and restart the Ollama service:

```shell
sudo systemctl daemon-reload
sudo systemctl restart ollama
```
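Editing ollama.service directly works, but that file may be overwritten if Ollama’s install script is run again, for example to upgrade. A systemd drop-in override keeps the setting in a separate file. This is what `sudo systemctl edit ollama` creates interactively. Below is a non-interactive sketch. `expose_ollama` and `DROPIN_DIR` are our own names, and `override.conf` is the file name `systemctl edit` uses by default.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Drop-in directory for overrides of ollama.service.
DROPIN_DIR="${DROPIN_DIR:-/etc/systemd/system/ollama.service.d}"

# Write an override that makes Ollama listen on all interfaces, then restart it.
expose_ollama() {
  sudo mkdir -p "$DROPIN_DIR"
  printf '[Service]\nEnvironment="OLLAMA_HOST=0.0.0.0"\n' | sudo tee "$DROPIN_DIR/override.conf" >/dev/null
  sudo systemctl daemon-reload
  sudo systemctl restart ollama
  # Show the environment systemd now passes to the service.
  systemctl show ollama --property=Environment
}
```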

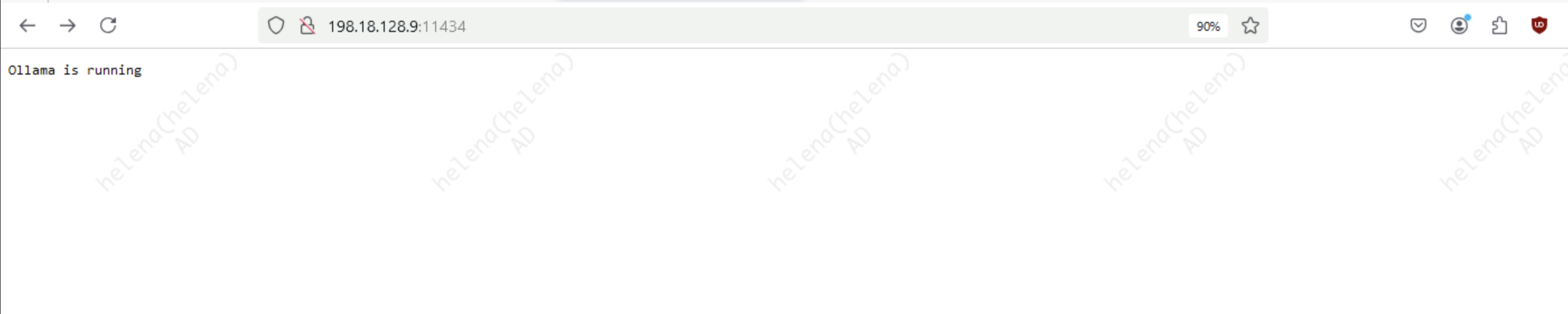

Browse to port 11434 on the server to make sure Ollama is running and reachable from another machine.
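The same check can be scripted. Ollama’s root endpoint replies with the plain text `Ollama is running`, and `/api/tags` returns the models that have been pulled. The sketch assumes `curl` and `jq` are installed. `OLLAMA_URL` defaults to localhost and should be set to the server’s address when checking from another machine. The function names are hypothetical.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Ollama server address; override with the server's IP when checking remotely.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Succeed only if the root endpoint returns Ollama's health message.
ollama_is_up() {
  [ "$(curl -s --max-time 5 "$OLLAMA_URL")" = "Ollama is running" ]
}

# Print the names of the models the server has pulled.
list_models() {
  curl -s "$OLLAMA_URL/api/tags" | jq -r '.models[].name'
}
```

A typical call is `ollama_is_up && list_models`. If DeepSeek was pulled earlier, `deepseek-r1:1.5b` should appear in the list.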

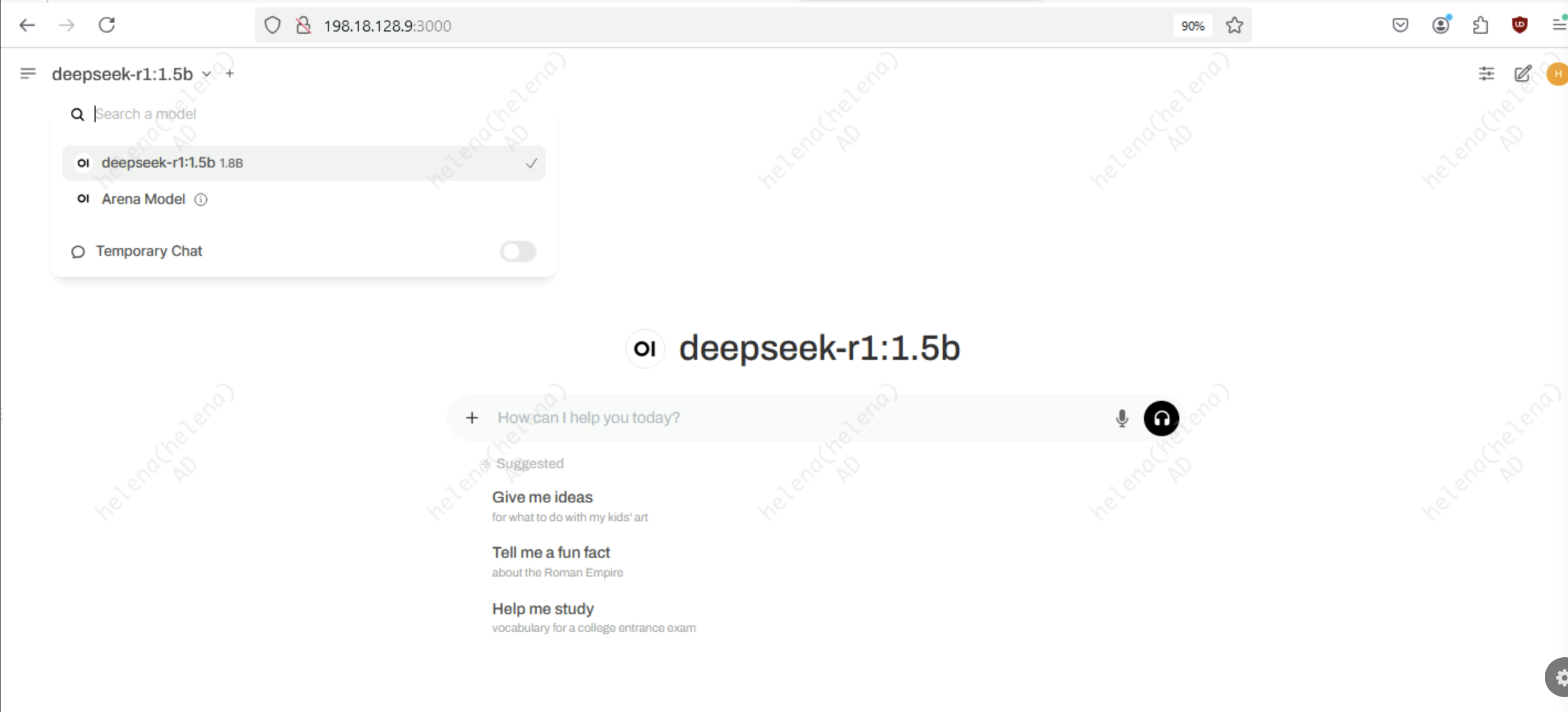

Now we can open port 3000 in a browser to reach Open WebUI and select DeepSeek as the model.